To get future blog posts in newsletter form, you can sign up here:

Making Event Data From Scratch: A Step-By-Step Guide

This tutorial covers how to create event data from a new set of text using existing Open Event Data Alliance tools. After going through it, you should be able to use the OEDA event data pipeline for your own projects with your own text.

The future of forecasting: the good and the bad

Science has a special issue this month on forecasting political behavior, which included an essay by Cederman and Weidmann in which they discuss the limitations of current conflict forecasting models, as well as the areas where they’re better than many people, including political science scholars, think they are.

CAMEO Dictionary Coverage

I was going through the Petrarch2 dictionary code, working on live updating of dictionaries for the human coding interface, and ended up taking a look at the dictionaries’ coverage of different CAMEO event codes.

Three Tasks in Automated Text Geocoding

I’ve been working a lot of automated geocoding of text over the last 6 months, and I’ve found myself consistently describing the same set of tasks or ways to extract location information from text.

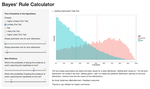

The Good Judgement Project and Bayes' Calculator

It’s been a very forecast-y week. Between (finally) reading Phil Tetlock’s excellent Expert Political Judgement, going to a half dozen panels at ISA on forecasting and event data, and today’s NPR story about the Good Judgement Project, I’ve been thinking a lot about how to make political forecasts and how we know when they’re good.