Managing Machine Learning Experiments

Image credit: NASA


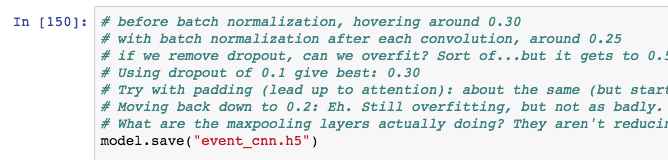

Reproducible methods like knitr and version control using git are on their way toward being standard for academic code, even in social science disciplines such as political science. knitr, Rmarkdown, and Jupyter notebooks make it easy to verify that your findings and figures come from the most recent version of your code and that it runs without errors. Putting your research code in git ensures that your changes are deliberate and that you can go back and recover your code at any previous point. This is all very important for ensuring that your final analysis is accurate before publication.

The tools to version, track, and organize experiments on machine learning models are much less well developed, in my experience. A system for organizing experiments needs to store the parameters, code, and data used in each model and record the trained model and its performance. This information should make it clear where results came from and how to reproduce them in the future.

This all came to a head for me this weekend, as I worked on a paper for my dissertation on linking events in text with the locations where they occur using neural nets in Keras. I was tracking my ML experiments in stickies and scattered Jupyter notebooks, which is a bad way to do science. Keeping notes like “changed dropout to 0.5: f1 score dropped to 0.48. Better with second dataset” was becoming unmanageable and risked leading me away from real model improvements. After looking at a few existing approaches (this was particularly interesting), I decided my needs were different enough that I should roll my own organizational system for experiments.

The basic organizational principle of this system is that each experiment is defined by a folder that includes all the configuration parameters needed to run the model and stores all the output and evaluation for that model. In contrast to existing data science project organizational schemes, this structure puts much more emphasis on tracking experiments than on organizing the rest of the code (which I keep in an existing structure in a GitHub repo). Each experiment folder starts with a config file that specifies the location of the data to use and the model parameters. Optionally, it can include a custom model that is imported and used instead of the default model. `notes.txt` is completely manual: it's where I record what I was thinking or trying to figure out with the experiment (e.g. “what happens if we use a sigmoid activation for the dense layers?”).

A better way to do it

```
experiments/
├── exp_1/
│   ├── config.txt
│   ├── custom_model.py (optional)
│   └── notes.txt
├── exp_2/
...
```
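Because `custom_model.py` lives in a different folder for every experiment, a plain `import` statement won't find it. One way to load it is with the standard library's `importlib.util`. This is a minimal sketch, not the author's code: it assumes (hypothetically) that a custom model file exposes a function called `make_model`, and the helper names `load_custom_module` and `pick_model_builder` are made up for illustration.

```python
import importlib.util
from pathlib import Path
from types import ModuleType
from typing import Callable, Optional


def load_custom_module(exp_dir: str) -> Optional[ModuleType]:
    """Import <exp_dir>/custom_model.py as a module, or return None if it is absent."""
    path = Path(exp_dir) / "custom_model.py"
    if not path.is_file():
        return None
    spec = importlib.util.spec_from_file_location("custom_model", str(path))
    module = importlib.util.module_from_spec(spec)  # not registered in sys.modules
    spec.loader.exec_module(module)  # runs the file's top-level code
    return module


def pick_model_builder(exp_dir: str, default_builder: Callable) -> Callable:
    """Use custom_model.make_model (hypothetical name) if defined, else the default builder."""
    module = load_custom_module(exp_dir)
    if module is not None and hasattr(module, "make_model"):
        return module.make_model
    return default_builder
```

Since the loaded module isn't cached in `sys.modules`, pointing the script at a different experiment folder always picks up that folder's file rather than a stale copy.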

The main code is in the directory above `experiments/` and takes as its only argument the location of an experiment folder: `python event_model.py -i experiments/exp_8/`. Inside the `config.txt` file are the parameters I could potentially change:

```ini
[Data]
use_cache = True
cache_loc = /Users/ahalterman/MIT/Geolocation/formatted.pkl
minerva_dir = /Users/ahalterman/MIT/Geolocation/minerva_labeled/labeled/
prodigy_dir = /Users/ahalterman/MIT/Geolocation/event_geolocation_manual.jsonl

[Model]
nlp_model = en_core_web_sm
filter_size = 128
epochs = 15
conv_dropout = 0.4
conv_activation = relu
dense_dropout = 0.4
dense_activation = relu
dense_units = 1024
batch_size = 56
early_stopping = True
patience = 4
```

This config file is parsed in the main code with `ConfigParser`, and each parameter is slotted into its appropriate place in the code:

```python
model = make_CNN_model(X, Y,
                       filter_size=int(config['Model']['filter_size']),
                       conv_dropout=float(config['Model']['conv_dropout']),
                       conv_activation=str(config['Model']['conv_activation']),
                       ...
```
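Instead of wrapping each value in `int()` or `float()`, `ConfigParser` sections also provide typed getters (`getint`, `getfloat`, `getboolean`). The sketch below shows one way the `-i` argument and `config.txt` could be wired together. `parse_args`, `read_config`, and `model_params` are hypothetical names, and only a few of the `[Model]` keys are shown.

```python
import argparse
import configparser
from pathlib import Path


def parse_args(argv=None) -> argparse.Namespace:
    """Parse the single -i/--input argument pointing at an experiment folder."""
    parser = argparse.ArgumentParser(description="Run one experiment.")
    parser.add_argument("-i", "--input", required=True,
                        help="experiment folder containing config.txt")
    return parser.parse_args(argv)


def read_config(exp_dir: str) -> configparser.ConfigParser:
    """Load <exp_dir>/config.txt, failing loudly if it is missing."""
    config = configparser.ConfigParser()
    path = Path(exp_dir) / "config.txt"
    if not config.read(str(path)):  # read() returns the list of files it parsed
        raise FileNotFoundError(path)
    return config


def model_params(config: configparser.ConfigParser) -> dict:
    """Pull typed values out of the [Model] section."""
    m = config["Model"]
    return {
        "filter_size": m.getint("filter_size"),
        "conv_dropout": m.getfloat("conv_dropout"),
        "conv_activation": m.get("conv_activation"),
        "dense_units": m.getint("dense_units"),
        "early_stopping": m.getboolean("early_stopping"),  # "True"/"False" -> bool
        "patience": m.getint("patience"),
    }
```

`getboolean` matters for a flag like `early_stopping`: `bool("False")` is `True` in Python, so a plain cast would silently ignore a switched-off option.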

After running, the main code writes out the results of the experiment to the same folder, keeping everything nice and tidy together:

```
experiments/
├── exp_1/
│   ├── config.txt
│   ├── custom_model.py
│   ├── notes.txt
│   ├── run_info.txt
│   ├── model_diagram.pdf
│   └── CNN_event.h5
├── exp_2/
...
```

`model_diagram.pdf` is the Keras diagram of the model, which is useful as a quick reference or for slides. The folder also holds the trained model itself (`CNN_event.h5`). `run_info.txt` is the heart of the experimental output. One function in my main code takes in various metrics and information from the run and writes them out to a text file. Specifically, it reports the training history of the model and the precision and recall of the model under various cutpoints (I'm generating token-level labels, so these are useful metrics for me).
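A hypothetical writer in the same spirit (not the author's function) could take the commit hash, a few counts, and two tables given as `{column: values}` dicts. The `history` argument assumes the dict form Keras exposes as `History.history`, which maps each metric name to a list of per-epoch values. `write_run_info` and `tsv` are illustrative names.

```python
from datetime import datetime
from pathlib import Path


def tsv(columns: dict) -> str:
    """Render {column_name: per-row values} as tab-separated text with a row index."""
    names = list(columns)
    rows = zip(*(columns[n] for n in names))
    lines = ["\t".join(["", *names])]
    lines += ["\t".join([str(i), *(f"{v:.6f}" for v in row)]) for i, row in enumerate(rows)]
    return "\n".join(lines)


def write_run_info(exp_dir, commit, custom_model, n_train, n_features, n_eval,
                   pr_table: dict, history: dict) -> Path:
    """Write a plain-text summary of one run to <exp_dir>/run_info.txt."""
    lines = [
        f"Model ran at {datetime.now():%Y-%m-%d %H:%M:%S} "
        f"using code with latest commit '{commit}'.",
        f"Custom model: {custom_model}",
        f"Total training data was {n_train} examples, with {n_features} features.",
        f"Evaluated on {n_eval} evaluation examples.",
        "Precision/recall metrics:",
        tsv(pr_table),
        "Training history:",
        tsv(history),  # e.g. the .history dict of the object Keras' fit() returns
    ]
    path = Path(exp_dir) / "run_info.txt"
    path.write_text("\n".join(lines) + "\n")
    return path
```

Tab separation is a deliberate choice in this sketch: each table can be copied into a spreadsheet or parsed back later without fiddling with column widths.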

```
Model ran at 2018-03-03 21:43:18 using code with latest commit '17d84703d69694ea0652a1a05964b35b2dc6278d'.
Custom model: True
Total training data was 4010 examples, with 566 features.
Evaluated on 1686 evaluation examples.
Precision/recall metrics:
   cutpoint  precision    recall  div_zero_perc
0      0.01   0.162429  0.969084       0.000593
1      0.05   0.279618  0.903985       0.001186
2      0.10   0.379355  0.850579       0.001779
3      0.20   0.499955  0.748342       0.009490
4      0.30   0.564474  0.672170       0.024911
5      0.40   0.584063  0.604540       0.065243
6      0.50   0.540421  0.514433       0.128707
7      0.60   0.467949  0.428728       0.206406
Training history:
   categorical_accuracy      loss  val_categorical_accuracy  val_loss
0              0.028055  0.117838                  0.013716  0.132258
1              0.027120  0.087719                  0.027431  0.119623
2              0.024938  0.085464                  0.024938  0.138993
3              0.025873  0.081080                  0.024938  0.090611
4              0.029302  0.080911                  0.023691  0.108950
5              0.038965  0.078171                  0.043641  0.081255
6              0.057045  0.073384                  0.082294  0.073213
7              0.096633  0.067497                  0.147132  0.080519
```
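The precision/recall table comes from thresholding predicted probabilities at each cutpoint. The sketch below computes micro-averaged precision and recall (pooled over all token labels) with NumPy. It is an assumption about how such a table can be built, not a reproduction of the author's computation, and it leaves out the `div_zero_perc` column. Precision is reported as NaN when no label clears the cutpoint.

```python
import numpy as np

# Same cutpoints as the run_info.txt example.
DEFAULT_CUTPOINTS = (0.01, 0.05, 0.10, 0.20, 0.30, 0.40, 0.50, 0.60)


def precision_recall_at_cutpoints(y_true, y_prob, cutpoints=DEFAULT_CUTPOINTS):
    """Micro-averaged precision and recall for each probability cutpoint.

    y_true: 0/1 labels, any shape (e.g. tokens x classes).
    y_prob: predicted probabilities with the same shape as y_true.
    Returns a list of (cutpoint, precision, recall) tuples.
    """
    y_true = np.asarray(y_true).astype(bool)
    y_prob = np.asarray(y_prob, dtype=float)
    n_true = int(y_true.sum())  # all gold-positive labels, pooled
    rows = []
    for cut in cutpoints:
        pred = y_prob >= cut  # predicted positive if the probability clears the cutpoint
        n_pred = int(pred.sum())
        tp = int((pred & y_true).sum())
        precision = tp / n_pred if n_pred else float("nan")  # nothing predicted
        recall = tp / n_true if n_true else float("nan")  # no gold positives
        rows.append((cut, precision, recall))
    return rows
```

Lower cutpoints trade precision for recall, which is the pattern visible in the table: recall falls steadily as the cutpoint rises while precision peaks in the middle.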

The output file also includes the latest commit of the code, which makes it possible to regenerate the results even weeks later, after many changes to the code. This only works, of course, if the version of the code used for the run actually matches an existing git commit. A few lines of code in my main `event_model.py` make sure there are no uncommitted changes to the code and make it irritating to continue if there are:

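```python
import re
import subprocess
import sys

# Stop if event_model.py has uncommitted changes, so the commit hash
# written to run_info.txt actually describes the code that ran.
git_status = subprocess.check_output(['git', 'status']).decode().strip()
if re.search("event_model.py", git_status):
    print("You have uncommitted changes to `event_model.py`. Please commit them before proceeding to ensure reproducibility.")
    answer = input("Type 'testing' to continue or anything else to quit: ")
    if answer != "testing":
        print("Bye!")
        sys.exit(0)
```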
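Matching `event_model.py` against the human-readable `git status` text works, but git also offers machine-readable output. A sketch, assuming git is on the PATH and Python 3.7+ (for `text=True`): `git status --porcelain` prints one `XY path` line per modified or untracked file, and `git rev-parse HEAD` returns the full commit hash of the kind recorded in `run_info.txt`. The function names are hypothetical.

```python
import subprocess


def latest_commit(repo: str = ".") -> str:
    """Full hash of the commit currently checked out (HEAD)."""
    return subprocess.check_output(["git", "rev-parse", "HEAD"], cwd=repo, text=True).strip()


def uncommitted_files(paths=("event_model.py",), repo: str = ".") -> list:
    """Return which of `paths` have staged, unstaged, or untracked changes."""
    out = subprocess.check_output(
        ["git", "status", "--porcelain", "--", *paths], cwd=repo, text=True)
    # Porcelain lines look like 'XY <path>' (path relative to the repo root),
    # so the path starts at column 3.
    return [line[3:] for line in out.splitlines() if line.strip()]
```

Restricting the status call to specific paths means edits to unrelated files (notebooks, notes) don't trigger the prompt, which matches the intent of checking only `event_model.py`.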

All of the relevant parts of the code are available here in case you'd like to implement something similar.